Introduction

In this section, we will cover statistical methods used for evaluating data and validating hypotheses. Knowing the different types of statistical tests to perform will be critical for your own dataset.

Learning Objectives

- Explain the purpose of performing statistical tests.

- Identify what kind of data types to use for statistical tests.

- Create a new working git branch with statistical tests

What is a statistical test?

Statistical methods are used to interpret the data. It can include data cleaning, transformation and finding the right models or methods to test.

Some statistical tests are meant to understand the distributions of data. Other types of statistical tests are meant to compare one distribution against another, or to see if there is a relationships between the dataset.

Types of Data that work best with different tests

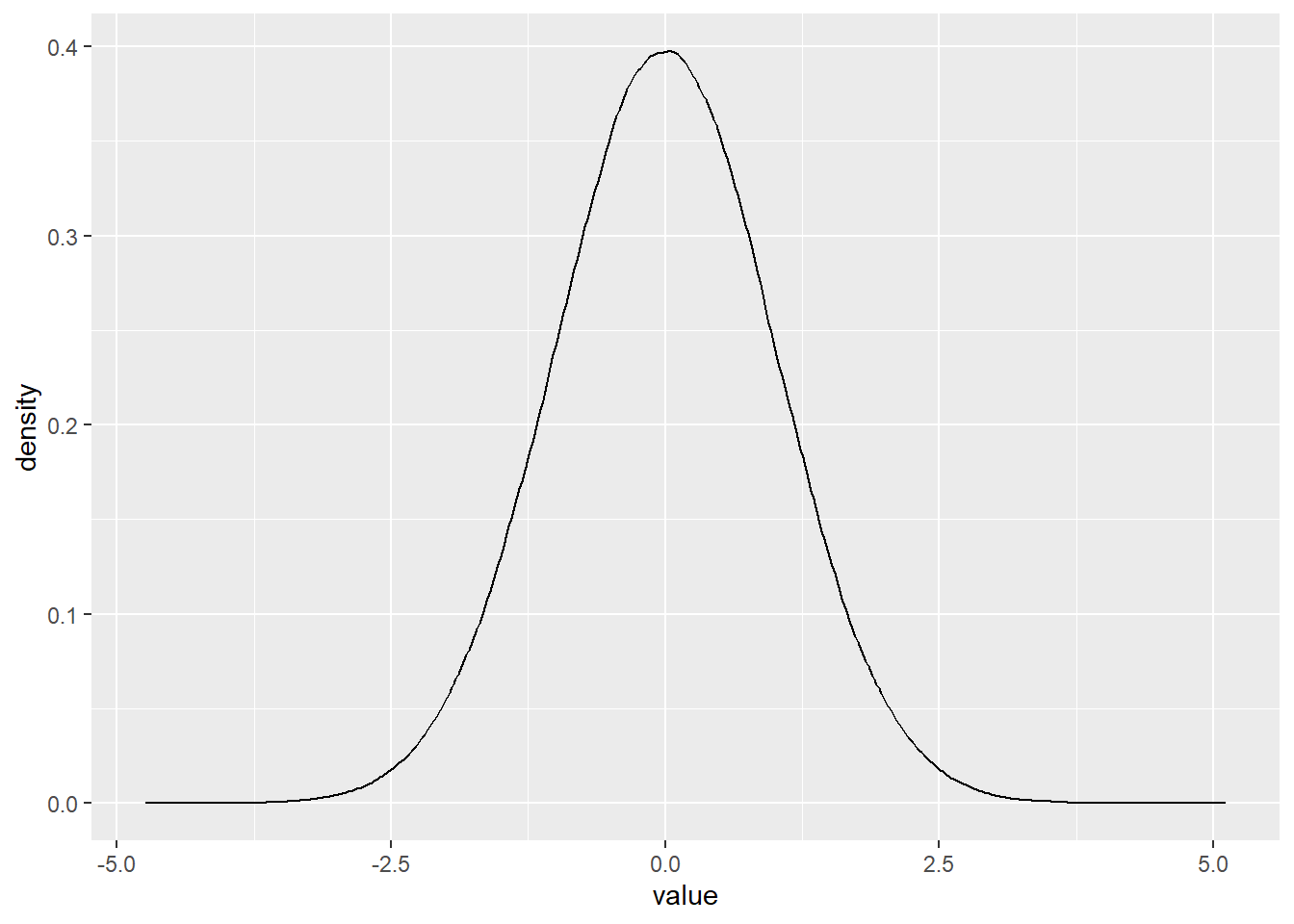

Different data formats have unique distributions, which are important to understand before performing any statistical tests. Tests like T-Tests work best with normal-style data, which has to be manually created. Also, there are tests that can convert data into a numerical result to run another test.

For this exercise we will discuss the common types of tests:

Normal test Normal test involves checking a statistical assumption. One of the most common checks is the normality assumption.

T-test Allows for 2 means to be compared. The most common type involves the use of numerical values to perform a comparison.

Pearson Test Determines how strong a relationship is between 2 distributions

Chi-squared Test: Use for evaluating the relationship between 2 categorical features.